Diving Deep into the NodeJS Lifecycle - Taking Advantage of NodeJS's Single-Threaded Architecture

As you may know, NodeJS is built around a single thread that handles operations in a non-blocking way. This thread repeatedly runs through a cycle called the event loop.

Even though the event loop runs on NodeJS's single thread, it offloads operations to the system kernel whenever possible, which is what makes non-blocking I/O possible.

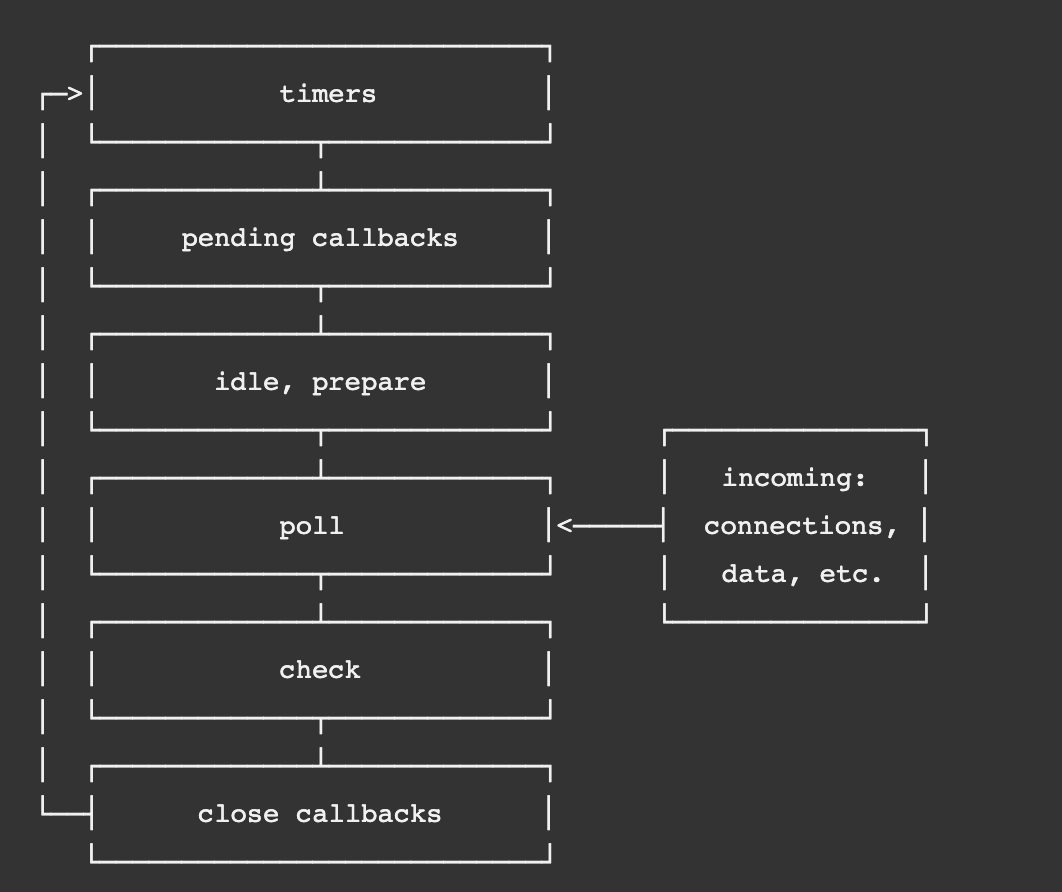

The event loop diagram (from the official Node docs) shows the order of the phases; here is how operations are handled in each one:

- timers: this phase executes callbacks scheduled by `setTimeout()` and `setInterval()`.
- pending callbacks: executes I/O callbacks deferred to the next loop iteration.
- idle, prepare: only used internally.
- poll: retrieves new I/O events and executes I/O-related callbacks (almost all of them, except close callbacks, callbacks scheduled by timers, and `setImmediate()`); Node will block here when appropriate.
- check: `setImmediate()` callbacks are invoked here.
- close callbacks: some close callbacks, e.g. `socket.on('close', ...)`.

So, contrary to common belief, NodeJS isn't strictly single-threaded, but it isn't fully multithreaded either. Imagine a server whose CPU supports 16 threads. However well designed the event loop is, JavaScript callbacks still run one at a time on a single thread, and that same thread hands tasks to the thread pool one by one. Under high traffic, this can lead to unwanted delays.
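The single-thread limitation is easy to observe: while JavaScript is busy on the main thread, no other callback (timers, I/O, incoming requests) can run, no matter how many CPU threads are idle. This sketch assumes a busy-wait loop as a stand-in for an expensive request handler; `blockFor` and `measureTimerDelay` are hypothetical names.

```javascript
// Hypothetical helper: busy-waits on the main thread for `ms` milliseconds,
// standing in for a CPU-heavy request handler.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Spinning here keeps the event loop from moving to any phase.
  }
}

// Schedules a 0 ms timer, blocks the thread, and resolves with how many
// milliseconds actually passed before the timer callback ran.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    blockFor(blockMs);
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`a 0 ms timer fired after ${delay} ms`);
});
```

The timer can never fire earlier than the blocking work allows, which is exactly what a queue of requests experiences when one handler hogs the thread.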

Imagine a pizza shop with 4 couriers and 1 order manager who takes orders and hands them to the couriers. As more and more orders come in, each new order takes longer to reach the next available courier, because no matter how fast the manager works, they can only handle one order at a time.

In this analogy, the order manager is NodeJS's single thread running the event loop, and the 4 couriers are NodeJS's thread pool (libuv's thread pool has 4 threads by default).
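The couriers map fairly directly to code. Some built-in APIs, such as `crypto.pbkdf2()`, run on libuv's thread pool, whose size defaults to 4 and can be changed by setting the `UV_THREADPOOL_SIZE` environment variable when launching the process. The sketch below is only an illustration: the password, salts, and iteration count are arbitrary, and `hashInParallel` is a hypothetical helper. On a machine with at least 4 cores, 8 jobs typically finish in two waves of roughly 4, much like orders waiting for a free courier; exact timings depend on the hardware.

```javascript
const crypto = require('node:crypto');

// Starts `count` pbkdf2 hashes at once. Each hash runs on a libuv
// thread-pool thread, so at most UV_THREADPOOL_SIZE (default 4) of them
// make progress at the same time; the rest wait in the queue.
function hashInParallel(count, iterations = 100000) {
  const start = Date.now();
  const jobs = Array.from({ length: count }, (_, i) =>
    new Promise((resolve, reject) => {
      crypto.pbkdf2('secret', `salt-${i}`, iterations, 64, 'sha512', (err, key) => {
        if (err) return reject(err);
        // Elapsed time since all jobs were queued, plus the derived key size.
        resolve({ job: i, ms: Date.now() - start, bytes: key.length });
      });
    }),
  );
  return Promise.all(jobs);
}

hashInParallel(8).then((results) => {
  for (const r of results) console.log(`job ${r.job} finished after ${r.ms} ms`);
});
```

Running the same script with `UV_THREADPOOL_SIZE=8` in the environment should let all 8 jobs progress concurrently, given enough cores: more couriers, same single manager.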

So, what if we split one (main) Node server into several instances, each running on its own port, start as many of them as the CPU has hardware threads, and then combine them all back onto a single port using a web server? Wouldn't that let us make the most of the CPU? Let's find out.
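A rough sketch of the "several servers on different ports" idea in plain Node, assuming `child_process.spawn` is used to start one independent NodeJS process per CPU thread. This is not the pm2-based setup used later in this article; `WORKER_SOURCE`, `startWorker`, `startWorkers`, and the port scheme are hypothetical. The key point is that each worker is a separate process with its own event loop, so the operating system can schedule them on different CPU threads. Running several servers inside one process would still share a single event loop.

```javascript
const { spawn } = require('node:child_process');
const os = require('node:os');

// Source for each worker: a minimal HTTP server that prints its port once it
// is listening. It is passed to `node -e` so the sketch fits in one file.
const WORKER_SOURCE = `
  const http = require('node:http');
  const server = http.createServer((req, res) => {
    res.end('handled by pid ' + process.pid + '\\n');
  });
  server.listen(Number(process.env.PORT), () => {
    console.log(server.address().port);
  });
`;

// Starts one NodeJS process listening on `port` (0 lets the OS pick a free
// port). Each process has its own event loop, so the OS can run them on
// different CPU threads at the same time.
function startWorker(port) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', WORKER_SOURCE], {
      env: { ...process.env, PORT: String(port) },
      stdio: ['ignore', 'pipe', 'inherit'],
    });
    child.once('error', reject);
    // If the worker dies before reporting a port (e.g. port already in use).
    child.once('exit', (code) => reject(new Error(`worker exited with code ${code}`)));
    // The first thing a worker writes to stdout is the port it bound.
    child.stdout.once('data', (chunk) => {
      resolve({ child, port: Number(String(chunk).trim()) });
    });
  });
}

// One worker per logical CPU on consecutive ports (basePort, basePort + 1, ...),
// ready to be placed behind a reverse proxy. Pass 0 to use random free ports.
function startWorkers(basePort, count = os.cpus().length) {
  const ports = Array.from({ length: count }, (_, i) => (basePort === 0 ? 0 : basePort + i));
  return Promise.all(ports.map((port) => startWorker(port)));
}
```

For example, `startWorkers(3001)` on a CPU with 8 hardware threads would start workers on ports 3001 through 3008, which a reverse proxy such as nginx can then expose on a single port. Node's built-in `cluster` module (also used by pm2's cluster mode) reaches a similar result with workers that share one port instead.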

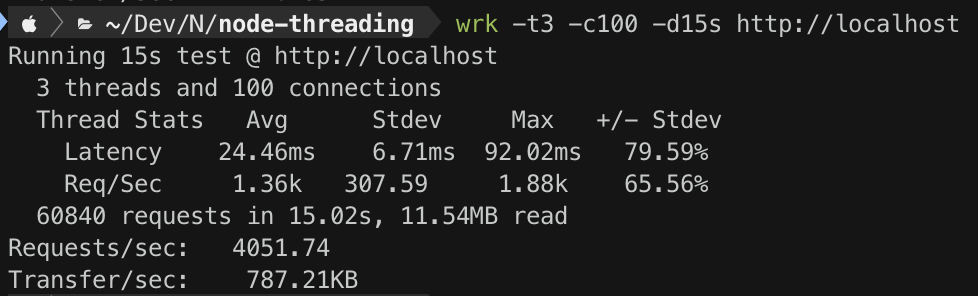

15-second stress test of the 1x (single-instance) NodeJS HTTP server, keeping 100 connections open over 3 threads:

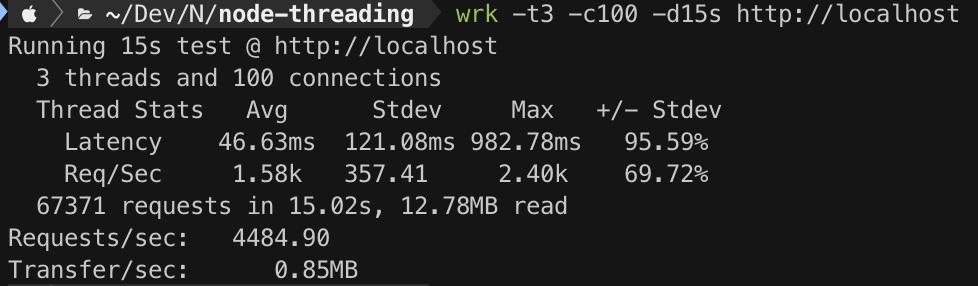

15-second stress test of the 4x (four-instance) NodeJS HTTP server, keeping 100 connections open over 3 threads:

In the benchmarks above, the 4x Node setup outperforms the 1x Node server by approximately 9.65%, a significant improvement that is worth considering.
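For anyone reproducing the comparison with their own runs, a relative difference like this is usually computed as follows. This assumes the 9.65% compares average requests per second; $R_{1x}$ and $R_{4x}$ are hypothetical labels for the two measured throughputs, whose raw values appear only in the benchmark screenshots.

$$
\Delta = \frac{R_{4x} - R_{1x}}{R_{1x}} \times 100\% \approx 9.65\%
$$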

There are various ways to apply this technique, depending on the situation. I chose to run Node with pm2 and nginx in Docker containers. As an example, I created a simple repository on this topic; you can find it here: node-max-threaded
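On the nginx side, the part that merges the workers back onto one port is an `upstream` group. By default, nginx distributes requests across its servers with weighted round-robin, which with equal weights is a simple rotation. The selection logic amounts to roughly this sketch; the addresses are hypothetical, and it illustrates the idea rather than nginx's actual implementation.

```javascript
// Hands out targets in rotation: 1st call -> first target, 2nd -> second,
// and back to the start after the last one. Illustration only.
function roundRobin(targets) {
  if (targets.length === 0) throw new Error('roundRobin needs at least one target');
  let next = 0;
  return () => {
    const target = targets[next];
    next = (next + 1) % targets.length;
    return target;
  };
}

// Hypothetical upstream addresses for four NodeJS workers.
const pick = roundRobin(['127.0.0.1:3001', '127.0.0.1:3002', '127.0.0.1:3003', '127.0.0.1:3004']);
// Five requests: ports 3001, 3002, 3003, 3004, then 3001 again.
console.log(Array.from({ length: 5 }, () => pick()));
```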

Source references:

- for event-loop diagram: Official Node Docs